
Introduction

Dangers of AI, risks of AI, threats of AI: whatever we call them, are they real? Since the launch of OpenAI’s ChatGPT, these concerns have been growing. These days, it’s not unusual to interact with a robot, chatbot, or virtual assistant, and Artificial Intelligence (AI) is advancing at an astonishing pace. Even though we are excited about the future of automation, we must remember that with great power comes great responsibility. The risks of artificial intelligence range from the loss of human jobs to the creation of machines that are smarter than humans. As we push deeper into the frontiers of AI, we must pause and consider the potential dangers we face. This article discusses the negative aspects of AI and how we can keep them from becoming a reality.

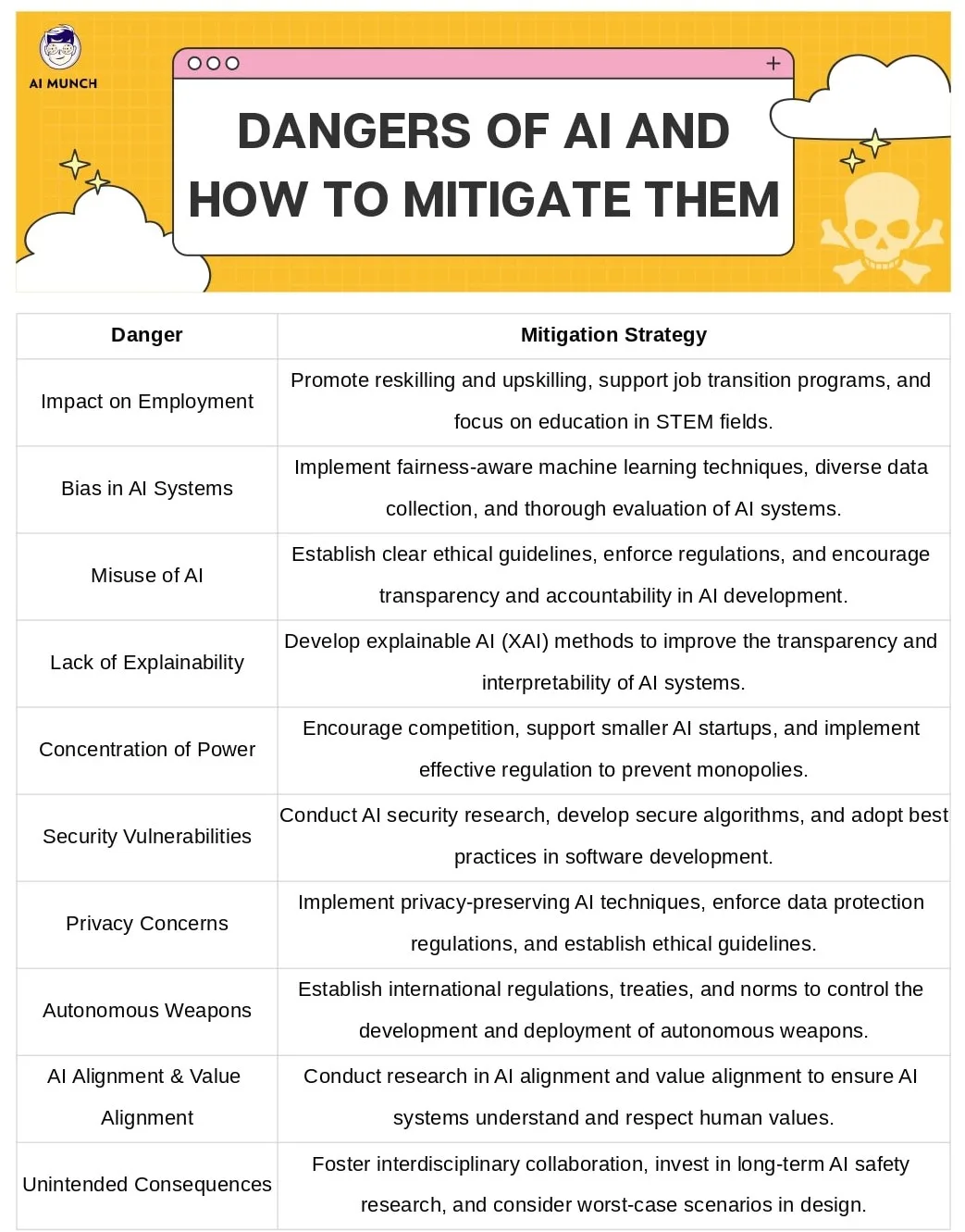

Dangers of AI and how to mitigate them

Even after launching OpenAI’s GPT models, Sam Altman, a co-founder of the company, has warned the world about the dangers of AI.

I. Lack of explainability and transparency

AI systems, especially deep learning models, can be difficult to understand and interpret. This makes it challenging for humans to trust the decisions made by these systems.

Explainable AI (XAI) methods can make AI systems more transparent. The goal of XAI techniques is to accompany a model’s decisions with explanations that humans can follow, for example by showing which inputs influenced a decision the most.
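One widely used XAI idea is permutation importance: shuffle one input feature at a time and measure how much the model’s accuracy drops. The sketch below is only an illustration under stated assumptions. The `toy_model` function, the two features, and the data are all hypothetical stand-ins for a real black-box model and dataset.

```python
import random

def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Average drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            # Shuffle only column j, leaving every other feature untouched.
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical "black box": approves when feature 0 exceeds 50
# and ignores feature 1 entirely.
def toy_model(row):
    return int(row[0] > 50)

X = [[30, 1], [80, 0], [55, 1], [20, 0], [90, 1], [45, 0], [60, 0], [10, 1]]
y = [toy_model(row) for row in X]

scores = permutation_importance(toy_model, X, y)
print(scores)  # feature 0 should score well above feature 1
```

A feature the model never uses gets an importance of zero, which gives a human reviewer a first hint about what actually drives the decisions.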

II. The concentration of power and monopolies

The development and use of AI technologies can put more power in the hands of a few large tech companies. This could make competing and developing new ideas more challenging for other companies.

Promoting competition, helping smaller AI startups, and implementing reasonable regulations can help make the AI ecosystem more diverse and stop monopolies from forming.

III. Security vulnerabilities

AI systems can be attacked with adversarial examples: inputs carefully crafted to trick the system into making wrong decisions.

Research in AI security can help identify potential vulnerabilities and develop robust countermeasures. This means making more secure algorithms, using the best practices for creating software, and keeping up with the latest security threats.
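To make the idea of an adversarial example concrete, here is a minimal sketch of the fast gradient sign method (FGSM) applied to a tiny logistic-regression classifier. The weights, the input, and the `eps` budget are made-up values chosen for illustration; real attacks target far larger models, but the mechanism is the same.

```python
import math

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def fgsm(w, b, x, y, eps):
    """Move each input feature by eps in the direction that increases the loss."""
    p = predict_proba(w, b, x)
    # For the logistic loss, d(loss)/d(x_i) = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    sign = lambda g: (g > 0) - (g < 0)
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

# Hypothetical model parameters and input.
w, b = [2.0, -1.0, 0.5], 0.0
x, y = [0.3, 0.2, 0.1], 1

x_adv = fgsm(w, b, x, y, eps=0.2)
print(predict_proba(w, b, x))      # above 0.5: classified as class 1
print(predict_proba(w, b, x_adv))  # below 0.5: the small perturbation flips the decision
```

Running such attacks against your own models is one way to find weaknesses before someone else does.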

IV. Privacy concerns

AI systems often need a lot of data to work well, which can make people worry about their privacy and data security.

Implementing AI techniques that protect privacy, like differential privacy, federated learning, and secure multi-party computation, can help protect user data while still letting AI systems work well. To protect user privacy, there should also be strong data protection rules and ethical guidelines in place.
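As a small illustration of differential privacy, the sketch below answers a counting query with the Laplace mechanism: it adds random noise scaled to `1 / epsilon` so that any single person’s record has only a limited effect on the published number. The records, the predicate, and the `epsilon` value are hypothetical, and a production system would also need to track the privacy budget across queries.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng):
    """Noisy count of the records that match the predicate."""
    true_count = sum(1 for r in records if predicate(r))
    # Adding or removing one person changes a count by at most 1
    # (sensitivity 1), so the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical dataset: ages of ten users.
ages = [23, 35, 41, 29, 52, 38, 61, 19, 44, 33]
rng = random.Random(42)

noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0, rng=rng)
print(noisy)  # close to the true count of 4, but not exact
```

A smaller `epsilon` means more noise and stronger privacy; a larger one gives more accurate answers with weaker protection.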

V. Autonomous weapons

When we talk about the dangers of AI, this is the one the world worries about most. AI-powered weapons that can operate on their own raise ethical concerns: humans could lose control in times of war, and an AI arms race could begin.

We must weigh the ethical implications of artificial intelligence in defense. There should be international rules, laws, and agreements to control the creation and use of AI-powered autonomous weapons. Collaboration between governments, international organizations, and AI researchers can support these efforts.

VI. AI alignment and value alignment

AI systems might not share the same values and goals as humans. If their goals are not correctly aligned with those of humans, this could lead to unintended consequences.

The goal of research into AI alignment and value alignment is to make sure that AI systems understand and respect human values. Techniques like inverse reinforcement learning, cooperative inverse reinforcement learning, and reward modeling can help bring the goals of AI systems in line with those of humans.

By addressing these worries through research, collaboration, regulation, and education, we can work to reduce the risks of AI and use it in a responsible way.

VII. The Impact of AI on Employment

Job loss is one of the risks AI could bring about. More advanced AI may eventually replace human workers in areas such as data processing and customer support. As machines begin to perform tasks that humans once did, the unemployment rate could rise significantly. But automation powered by AI could also bring benefits, like higher productivity and lower costs.

VIII. Preventing Bias in AI Systems

Bias is another issue that could arise in AI. AI systems can make mistakes if they aren’t trained on suitable data. They can learn patterns that aren’t true and treat some groups unfairly, which results in a faulty and unjust decision-making process. Training AI systems on broadly representative data and routinely auditing them for bias can reduce these risks.
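A routine audit can start with something as simple as comparing how often each group receives a favorable decision. The sketch below computes per-group selection rates and the gap between them, often called the demographic parity difference. The decisions and group labels are invented for illustration, and this is only one of several fairness metrics an audit might use.

```python
from collections import defaultdict

def selection_rates(decisions, groups):
    """Share of favorable decisions (1 = favorable) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_gap(decisions, groups):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical model outputs for ten applicants in two groups.
decisions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "a", "b", "b", "b", "b", "b"]

print(selection_rates(decisions, groups))
print(demographic_parity_gap(decisions, groups))
```

A large gap does not prove discrimination on its own, but it flags a model whose decisions deserve a closer look.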

IX. Mitigating the Misuse of AI

Another risk is that anyone can abuse this technology. Criminals can use AI to harm others and to spread false information, and these are only two of its many dangerous applications. The use of AI-controlled weapon systems that can function independently of human involvement raises ethical concerns and may have unexpected repercussions. Developing stringent security measures and establishing regulations and guidelines to control the use of AI will be essential to reducing the risks associated with its abuse.

“Although AI has the potential to bring about positive change, we must proceed with caution. We must guard against any unethical application of AI. It requires forethought and action to minimize any troubles.”

.@POTUS met with his Council of Advisors on Science and Technology to discuss the risks and opportunities that AI poses. This Administration has proposed an AI Bill of Rights to ensure that important protections for the American people are built into AI systems from the start. pic.twitter.com/mfPG3TKMJp

The White House (@WhiteHouse), April 4, 2023

Conclusion

AI could truly alter our society, but we must consider the risks that come with it. There are many myths and risks associated with AI. To guarantee the appropriate and ethical advancement and application of AI, we must take preventive measures to lessen those risks. Achieving this goal may require retraining workers, increasing data diversity, and creating rules for the appropriate application of this new technology.

FAQs on Dangers of AI

More mature AI may eventually replace human workers in data processing and customer service. Because machines will be doing work that humans used to do, this could significantly increase the unemployment rate. On the other hand, automation fueled by AI may lead to gains in efficiency and savings. Helping people whose jobs might be taken over by machines, for example by teaching them new skills, can soften the effects on society.

Because AI learns from data, it might unintentionally absorb and spread bias if the data it learns from is not representative of the population. Training AI systems on varied and representative data and routinely auditing their decisions for discrimination can reduce the risk of bias.

Cyberattacks and the spread of false information are only two examples of potential misuse of AI. The use of AI-controlled weapon systems that can function independently of human involvement raises ethical concerns and may have unexpected repercussions. Developing stringent security measures and establishing regulations and guidelines to control the use of AI will be essential to reducing the risks associated with its abuse.

Only by being proactive can we guarantee that AI is used responsibly and ethically. Retraining personnel, providing diverse data, and setting laws and standards to regulate its use are all options for reducing the danger.

Auditing AI decision-making is possible by checking the data and the algorithm and by keeping the decision-making process transparent, so that the inputs and logic behind a conclusion are available. Any stakeholder can then question a decision later if necessary. This can boost confidence in AI decision-making.
