I. Introduction

How does ChatGPT work, and how do you use it? Many people are looking for answers to these two questions. But first, what is ChatGPT? It is an AI language model created by the research lab OpenAI, which continues to improve it. It produces natural-sounding responses to text prompts, which makes chatbots, customer service tools, and language translation feel more natural. ChatGPT uses deep learning: it is trained on a very large body of text and learns to respond to text-based questions coherently. It is built on transformers, a type of neural network that excels at natural language processing (NLP) tasks. Because it has been trained on books, websites, and other written sources, ChatGPT can understand and answer questions on a wide range of topics.

II. What is GPT?

A. What GPT stands for

Before we get into how ChatGPT works, let’s define the technology behind it. ChatGPT is built on GPT, short for Generative Pre-trained Transformer. GPT is a language model that uses deep learning to generate coherent, consistent text in response to a prompt. There are some common myths about GPT as well. OpenAI’s GPT models learn from an enormous amount of text data, with the goal of producing output that is hard to tell apart from text written by a person. For a conversational customer service chatbot, Google’s LaMDA may be the better option. If you need an AI chatbot for a question-and-answer platform or for research, an OpenAI GPT model such as ChatGPT might be the better choice. We have already compared LaMDA and GPT.

B. Brief history of GPT

In 2018, OpenAI unveiled GPT-1, the first model in the GPT series. Trained on a large corpus of books, it achieved state-of-the-art results on a range of language tasks. In 2019, OpenAI released GPT-2, which was trained on a much larger dataset of web pages and could generate text that was often hard to distinguish from human writing. Because OpenAI was worried that such capable language models could be misused, it initially withheld the full GPT-2 model and released it in stages. GPT-3, released in 2020, has 175 billion parameters, making it by far the largest model in the series at the time.

III. How ChatGPT Works

A. ChatGPT’s structure and architecture

ChatGPT’s transformer architecture processes text as a sequence of tokens (words or pieces of words) and uses a self-attention mechanism that lets each token weigh the relevance of the tokens that come before it. This lets the model pick up patterns in its training text and produce complex responses. It is pre-trained with unsupervised (more precisely, self-supervised) learning on raw text, so it can generate text on many topics without being trained specifically on each one.
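To make the attention idea concrete, here is a minimal sketch of causal scaled dot-product self-attention in plain Python. It is a simplification, not OpenAI’s implementation: real transformers first multiply each token vector by learned query, key, and value matrices and run many attention heads in parallel, whereas this sketch uses the toy vectors directly. The causal mask means each token can only attend to itself and earlier tokens, as in GPT-style models.

```python
import math


def softmax(scores):
    """Numerically stable softmax: turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention with a causal mask.

    queries, keys, values: one vector (list of floats) per token.
    Token i may only look at tokens 0..i. Returns (outputs, weights).
    """
    d = len(queries[0])
    outputs, weights = [], []
    for i, q in enumerate(queries):
        # Similarity of token i to itself and every earlier token, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d)
                  for j in range(i + 1)]
        w = softmax(scores)
        # New representation of token i: weighted average of the visible value vectors.
        out = [sum(w[j] * values[j][k] for j in range(i + 1))
               for k in range(len(values[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


# Three toy token vectors, used directly as queries, keys, and values.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(x, x, x)
for row in weights:
    print([round(v, 3) for v in row])
```

Each printed row holds one token’s attention weights: the row for token i has i + 1 entries that sum to 1, because later tokens are masked out.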

To generate responses, ChatGPT relies on language modeling: predicting the probability of each possible next word in a sequence based on the words that came before it. This is what lets it produce answers that sound natural and well thought out.
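The idea of a probability distribution over the next word can be illustrated with a toy bigram model. This is only an analogy: ChatGPT computes its predictions with a neural network that conditions on the whole preceding context, while this hypothetical sketch just counts which word follows which in a three-sentence corpus.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probabilities(counts, prev):
    """Estimate P(next word | previous word) from the counts."""
    following = counts.get(prev, Counter())
    total = sum(following.values())
    if total == 0:
        return {}  # word never seen with a successor: no estimate
    return {word: n / total for word, n in following.items()}


corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram_model(corpus)
print(next_word_probabilities(model, "the"))
```

Here "cat" follows "the" in 2 of the 6 cases, so it gets probability 1/3; a language model generates text by repeatedly choosing from distributions like this one.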

B. Difference between ChatGPT and search engine

We have already compared ChatGPT with Google and asked whether it will replace the search engine. ChatGPT is unlike a traditional search engine because it does not use keywords to look up existing pages. Instead, it responds to prompts: it generates new text based on the context of the conversation. This makes it more human-like and better able to handle subtleties and complexities in language. Search engines, by contrast, are keyword-based, and users often refine their queries to get useful results. ChatGPT has its uses, but it is unlikely to replace search engines.

IV. How is OpenAI ChatGPT trained?

A. The Training process

ChatGPT’s underlying model is first trained with unsupervised learning, meaning it learns from data without human-written labels or feedback. The model is exposed to a massive collection of written material such as books, articles, and online content, and is repeatedly asked to predict which word (or token) comes next after a given sequence of input words. The correct answer is simply the word that actually follows in the text. This is repeated over a vast number of examples, so the model learns the themes and connections that keep recurring in the text. OpenAI then fine-tuned the model with human feedback: people wrote example answers and ranked model outputs, and this data was used to make ChatGPT’s responses more helpful.
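The next-word objective described above can be sketched in a few lines. The helper names here are hypothetical: one function turns raw text into (context, target) training pairs, showing why no human labels are needed, and the other computes the cross-entropy loss that training tries to minimize for a single made-up prediction.

```python
import math


def next_word_examples(words, context_size):
    """Slice a word sequence into (context, target) training pairs.

    The target is just the word that actually comes next in the text,
    so the labels come from the data itself rather than from annotators.
    """
    examples = []
    for i in range(1, len(words)):
        context = words[max(0, i - context_size):i]
        examples.append((context, words[i]))
    return examples


def cross_entropy(predicted, target):
    """Loss for one example: -log of the probability given to the true next word."""
    return -math.log(predicted.get(target, 1e-12))  # tiny floor avoids log(0)


sentence = "the model learns to predict the next word".split()
for context, target in next_word_examples(sentence, context_size=3):
    print(context, "->", target)

# Hypothetical model output for the context ["predict", "the", "next"].
prediction = {"word": 0.7, "token": 0.2, "step": 0.1}
print(round(cross_entropy(prediction, "word"), 4))
```

Training adjusts the model’s parameters to lower this loss, which means putting more probability on the word that really came next.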

B. The data used to train ChatGPT

OpenAI trained the models behind ChatGPT on books, articles, websites, and other written material. For GPT-3, OpenAI reported that the Common Crawl web portion of the data started as 45 terabytes of compressed text and was reduced to about 570 gigabytes (roughly 400 billion tokens) after filtering. Before use, the data was cleaned and filtered, for example to remove low-quality and duplicate documents.
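The token counts above refer to subword tokens. GPT-2 and GPT-3 split text using byte-pair encoding (BPE), which builds a vocabulary by repeatedly merging the most frequent adjacent pair of symbols. The sketch below shows that merge loop on characters. It is a simplification with hypothetical function names: real BPE starts from bytes, learns its merges from word frequencies across a huge corpus, and does not merge across word boundaries.

```python
from collections import Counter


def most_frequent_pair(tokens):
    """Return the most common adjacent pair of symbols, or None if there is none."""
    pairs = Counter(zip(tokens, tokens[1:]))
    return pairs.most_common(1)[0][0] if pairs else None


def merge_pair(tokens, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


def learn_bpe(text, num_merges):
    """Start from single characters and greedily merge the most frequent pair."""
    tokens = list(text)
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(tokens)
        if pair is None:
            break
        merges.append(pair)
        tokens = merge_pair(tokens, pair)
    return tokens, merges


tokens, merges = learn_bpe("low lower lowest", num_merges=3)
print(merges)
print(tokens)
```

After a few merges, frequent fragments such as "low" become single tokens, while rare words remain split into several pieces.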

V. How does ChatGPT generate responses?

A. Explanation of the generative process

The generative process is how ChatGPT answers text prompts. During training, the model learns to predict the next word (token) from the words that come before it. To produce a response, ChatGPT applies this one step at a time: it predicts the next token from the prompt, appends that token to the text, and then predicts the following token from the extended text. Repeating this step builds the response token by token until the answer is complete.
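In practice, models like ChatGPT generate one token at a time and feed each prediction back in as input. The loop below sketches this autoregressive process with greedy decoding, which always picks the single most likely next token. The `toy_model` function and its probability table are hypothetical stand-ins for the neural network; a real model computes its probabilities from the entire context, not just the last token.

```python
def generate(next_token_probs, prompt, max_new_tokens=10, end_token="<end>"):
    """Greedy autoregressive decoding.

    next_token_probs maps the tokens so far to {candidate: probability}.
    Each chosen token is appended before the next prediction is made.
    """
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)
        best = max(probs, key=probs.get)  # greedy: take the most likely token
        if best == end_token:
            break
        tokens.append(best)
    return tokens


# Hypothetical next-token table standing in for the neural network.
TOY_TABLE = {
    "how": {"are": 0.6, "is": 0.4},
    "are": {"you": 0.9, "we": 0.1},
    "you": {"today": 0.5, "<end>": 0.3, "doing": 0.2},
    "today": {"<end>": 1.0},
}


def toy_model(tokens):
    # Looks only at the last token; unknown tokens end the response.
    return TOY_TABLE.get(tokens[-1], {"<end>": 1.0})


print(" ".join(generate(toy_model, ["how"])))  # prints: how are you today
```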

B. Techniques used by ChatGPT to generate responses

ChatGPT relies on several techniques to generate answers. A crucial one is the attention mechanism, which lets the model focus on the most relevant words in the prompt and build a response around them. Beam search is another decoding method used with language models: it keeps several candidate answers in play and chooses the one that is most likely overall. Finally, adding a controlled amount of randomness when choosing each word lets ChatGPT produce more creative and varied answers. A setting called temperature controls how much randomness is used, so this technique is called temperature sampling.
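Temperature sampling can be sketched as follows. The model’s raw scores (logits) for candidate next words are divided by the temperature before the softmax: a temperature below 1 sharpens the distribution toward the top choice, and a temperature above 1 flattens it, giving less likely words more chance. The candidate words and scores are made up for illustration, and this sketch does not reflect ChatGPT’s actual sampling settings.

```python
import math
import random


def apply_temperature(logits, temperature):
    """Convert raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng):
    """Draw one token at random according to the temperature-adjusted probabilities."""
    probs = apply_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


tokens = ["blue", "clear", "cloudy", "green"]
logits = [2.0, 1.5, 0.5, -1.0]  # hypothetical scores for "The sky is ..."

for t in (0.2, 1.0, 2.0):
    print(t, [round(p, 3) for p in apply_temperature(logits, t)])

rng = random.Random(0)  # seeded so runs are repeatable
print([sample_token(tokens, logits, 1.0, rng) for _ in range(5)])
```

At 0.2 nearly all probability goes to "blue"; at 2.0 the other words become noticeably more likely, which is where the extra variety comes from.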

VI. How does ChatGPT handle different languages?

A. Multilingual capabilities of OpenAI ChatGPT

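ChatGPT can process a wide variety of languages thanks to its multilingual capabilities, including English, Chinese, Spanish, German, and French. Rather than using a separate model for each language, a single model is trained on text that includes many languages, although the training data is predominantly English. The model learns to produce results in a given language from the examples of that language in its training data.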

B. How ChatGPT handles different languages

When ChatGPT responds in another language, it uses the same process it uses for English: it predicts the response one token at a time. For this to work, the training data must include text in the target language. Keep in mind that the amount and quality of training text in that language strongly affect the quality of the response.
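One reason a single model can handle many languages is that GPT-2 and GPT-3 tokenize text with byte-level BPE: every string, in any script, is first represented as UTF-8 bytes, so no language falls outside the vocabulary. The sketch below shows only that first step, with a hypothetical helper name. Characters outside basic Latin take two or more bytes, and because the learned merges reflect mostly English training data, text in other languages is typically split into more tokens, which is one way the data imbalance shows up.

```python
def utf8_byte_tokens(text):
    """Encode text as UTF-8 byte values (0-255): the base alphabet of byte-level BPE."""
    return list(text.encode("utf-8"))


samples = {
    "English": "hello",
    "Spanish": "hola",
    "German": "grüße",
    "Chinese": "你好",
}
for language, word in samples.items():
    byte_tokens = utf8_byte_tokens(word)
    print(f"{language:8} {word}: {len(word)} characters -> {len(byte_tokens)} bytes")
```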

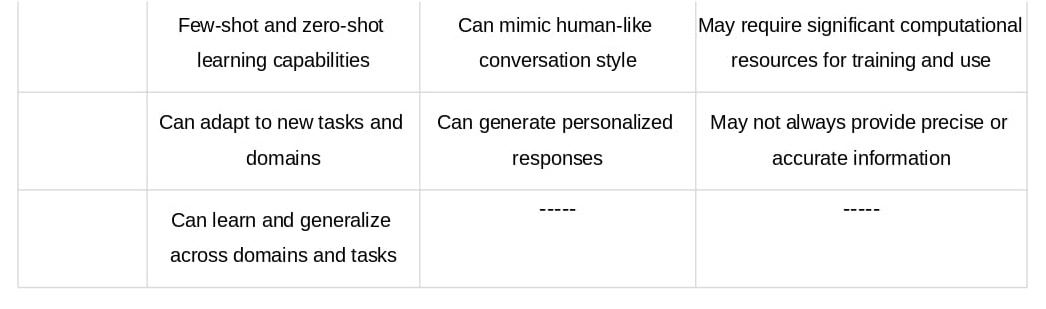

VII. Limitations of ChatGPT

A. ChatGPT’s current limitations

Although this technology is helpful, it has problems that limit how well it imitates human speech. First, it may not be objective: it is trained on huge text datasets that can contain biased or discriminatory language, which it may reproduce. It can also generate offensive or inappropriate content, which can be a problem in some settings.

Another drawback is that ChatGPT is not always reliable; it sometimes produces answers that are wrong or make no sense in context. It can also falter in long-form text generation, because it struggles to keep the big picture in mind over long stretches of text.
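A common explanation for this is the context window: a language model can only attend to a fixed number of tokens at once, so the oldest parts of a long conversation or document eventually fall out of view. The sketch below assumes the simplest policy, dropping the oldest messages first, and uses a hypothetical whitespace word count in place of a real tokenizer and a tiny made-up window size. It is not a description of ChatGPT’s internal context handling.

```python
def fit_to_context(messages, max_tokens, count_tokens=lambda m: len(m.split())):
    """Keep the most recent messages whose combined length fits the window.

    Older messages are dropped first. count_tokens defaults to a crude
    word count, standing in for a real tokenizer.
    """
    kept, used = [], 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))


conversation = [
    "My name is Ada and I live in Lisbon.",
    "I am planning a trip next month.",
    "Can you suggest some museums?",
    "Also, what is my name?",
]
print(fit_to_context(conversation, max_tokens=12))
```

With a 12-word window only the last two messages fit, so the message containing the name is no longer visible when the final question is asked.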

B. Concerns regarding ChatGPT

Concerns have been raised that ChatGPT could be used maliciously, especially to spread misinformation and hate. Because it writes fluent natural language, it can be used to produce fake news stories and social media posts that sound real. The misuse of generative AI more broadly to create deepfakes, that is, digitally altered videos and images, is also a growing concern.

Using AI tools like ChatGPT in the criminal justice system or in weapons that can operate autonomously also raises ethical questions. These worries highlight the need for ethical research and responsible deployment of AI tools like ChatGPT.

In short, ChatGPT imitates human speech well, but it has serious problems and safety concerns that need to be considered. It should be used with the same care and ethical consideration as any other technological tool, so that its benefits are maximized and its negative effects minimized.

VIII. How accurate is ChatGPT?

A. How ChatGPT’s accuracy is measured

Perplexity is a standard metric for evaluating language models such as the ones behind ChatGPT: it measures how well the model predicts the next word or words in a text. A lower perplexity score means the model predicts the next word more accurately.
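Formally, for a text of N tokens, perplexity is the exponential of the average negative log-probability the model assigned to each actual token: PPL = exp(-(1/N) Σ log p(w_i | w_1 ... w_{i-1})). A perplexity of 4, for instance, means the model was on average as uncertain as if it were choosing uniformly among 4 words. The sketch below computes it from a list of per-token probabilities; the probability values are invented for illustration.

```python
import math


def perplexity(token_probs):
    """exp of the average negative log-probability of the actual next tokens."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


# Hypothetical probabilities two models gave to the same four correct words.
confident_model = [0.9, 0.8, 0.7, 0.9]
uncertain_model = [0.2, 0.1, 0.3, 0.25]
print(round(perplexity(confident_model), 3))
print(round(perplexity(uncertain_model), 3))
```

The model that assigned higher probabilities to the words that actually appeared gets the lower, better perplexity.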

B. ChatGPT’s accuracy compared to other language models

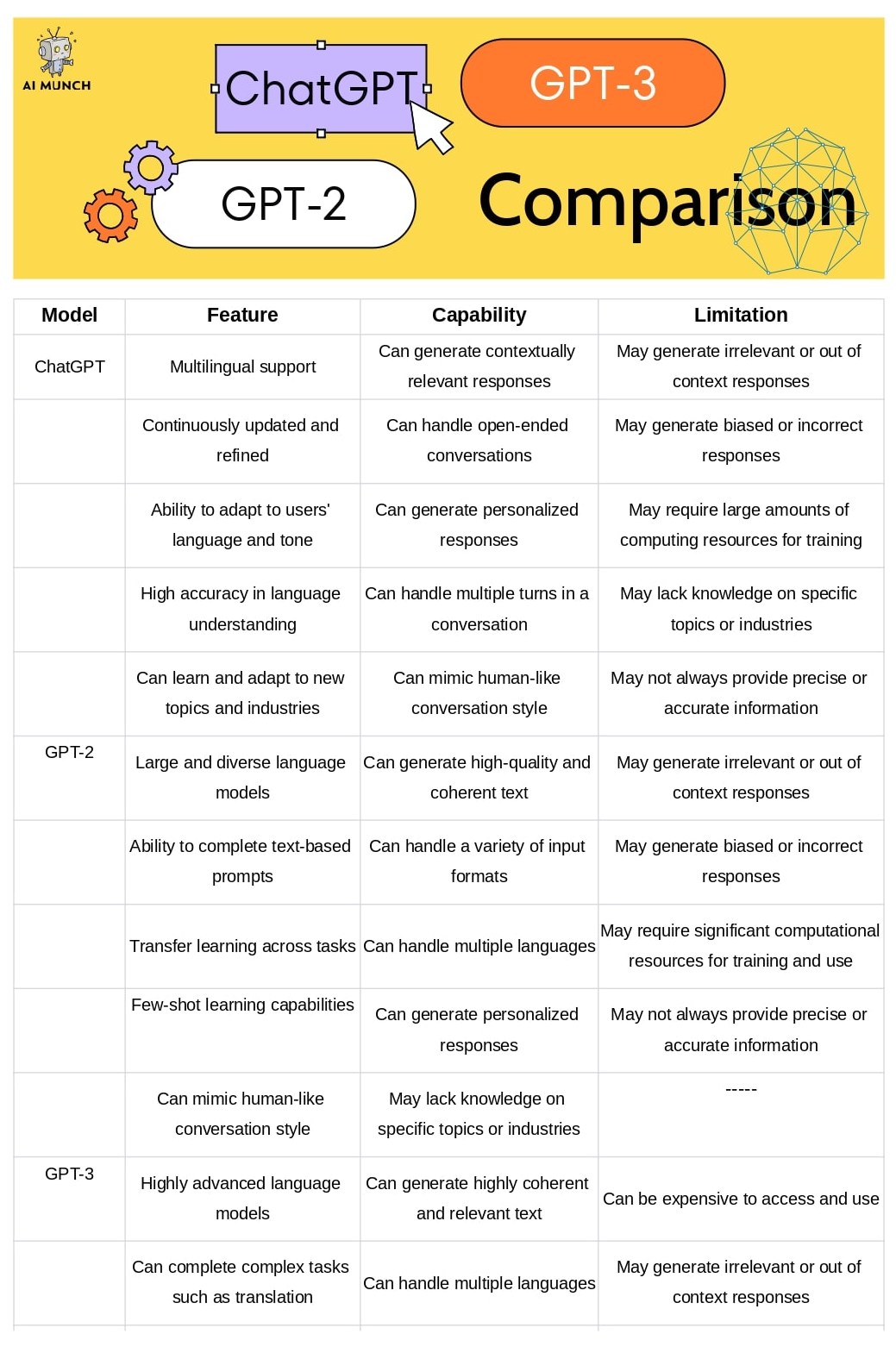

We have already seen the comparison table for ChatGPT, GPT-2, and GPT-3. The GPT family is among the most accurate language models, and GPT-3, the model family ChatGPT builds on, set state-of-the-art results on several language tasks when it was released. On some tasks, GPT-3 has approached human-level performance. However, the model’s accuracy depends on the quality and quantity of its training data as well as on the task at hand.

IX. Conclusion

In this article, we took a deep dive into how ChatGPT works, compared it with GPT-2 and GPT-3, and looked at how it learns from vast amounts of text data through unsupervised learning and how it answers text-based questions. We also discussed its accuracy, which for language models is commonly measured with the perplexity metric, and its ability to translate between multiple languages.

ChatGPT is a versatile language model that can be used for chatbots, customer service, language translation, creative writing, and more. Its multilingual capabilities and accuracy can benefit businesses and academics worldwide. Like all AI tools, ChatGPT should be used ethically and responsibly, so that its impact on society is positive and its adverse effects are as few as possible.

FAQs

ChatGPT processes data using a neural network architecture and deep learning methods. It uses a multi-layer transformer model to interpret text queries and generate relevant answers.

ChatGPT can be used for many text-based communication tasks, such as customer service, chatbots, conversational agents, and language translation. It’s useful for text summarization, idea generation, and creative writing.

ChatGPT is trained on a large amount of text, including conversational data. During training, the neural network is fine-tuned so that its answers are coherent and consistent.

ChatGPT uses a multi-layer transformer model to interpret what users are asking and generate relevant answers. In a conversation, it can produce responses that make sense and stay on topic.

Chatbots can help their owners make money by handling customer support, automating business processes, and generating leads. Some chatbots support e-commerce by letting users make purchases without leaving the chat window.

ChatGPT is built on a multi-layer transformer neural network architecture, specifically OpenAI’s Generative Pre-trained Transformer (GPT). GPT is pre-trained with unsupervised learning and generates natural language text.
